Highlights

Aurora: Payment Acquiring Solution with CockroachDB on Kubernetes

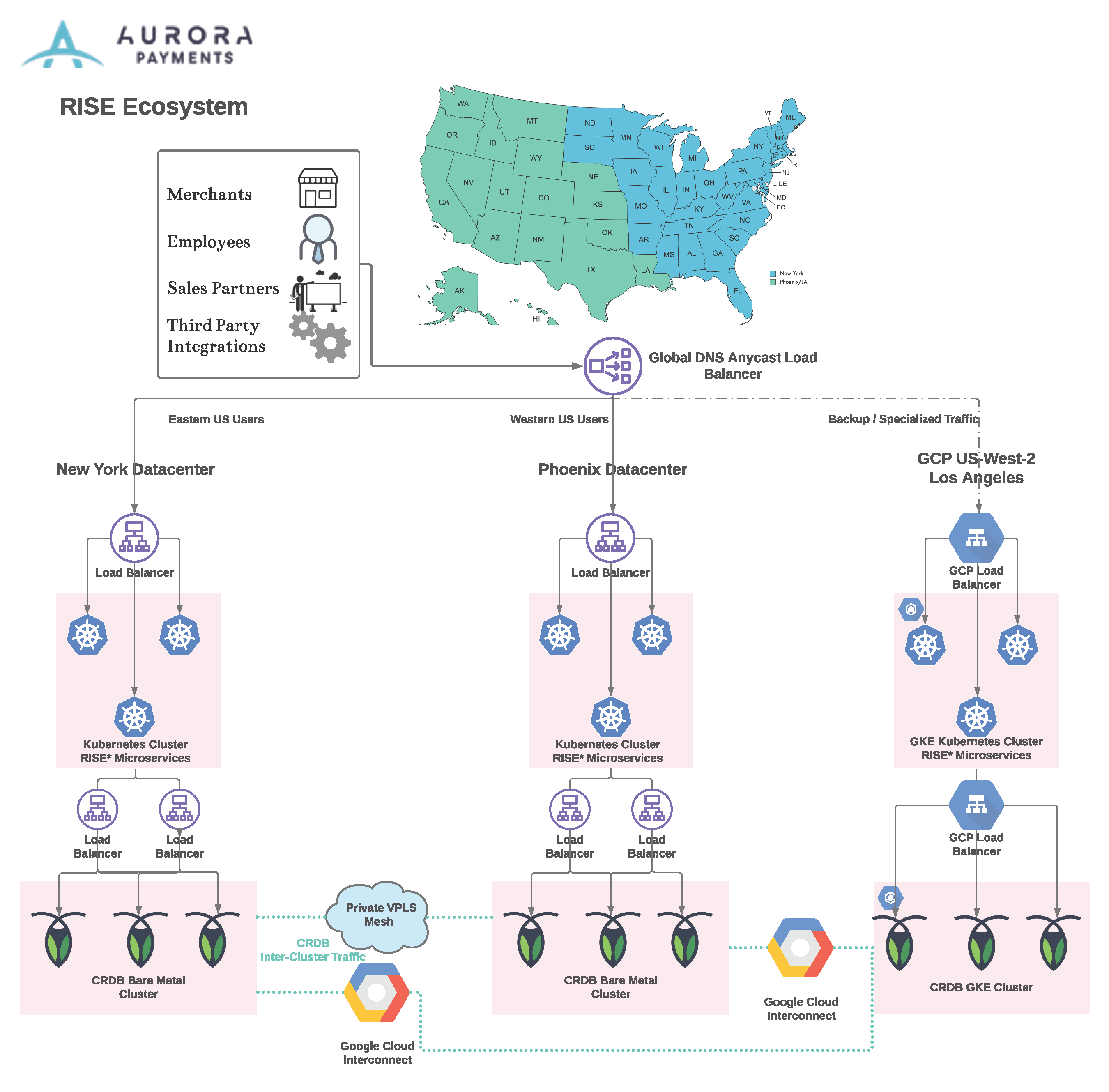

Aurora is a company that handles credit card payments. Such transactions must work all the time and be consistent and scalable. That is why they migrated from PostgreSQL to CockroachDB: eventual consistency is not an option in this business, and CockroachDB guarantees serializable transactions. The blog post describes the high-level architecture of the solution. Tech stack: .NET Core C#, ReactJS, CockroachDB.

Key takeaways. Hybrid cloud: Google Cloud plus 2 co-location facilities. Never be 100% cloud or 100% private, so there is no vendor lock-in and availability is better if a cloud provider goes down. Migrating from PostgreSQL is easy because CockroachDB is wire-compatible with it. Requests hit the nearest datacenter with DNS multicast.

Mozilla: How MDN’s site-search works

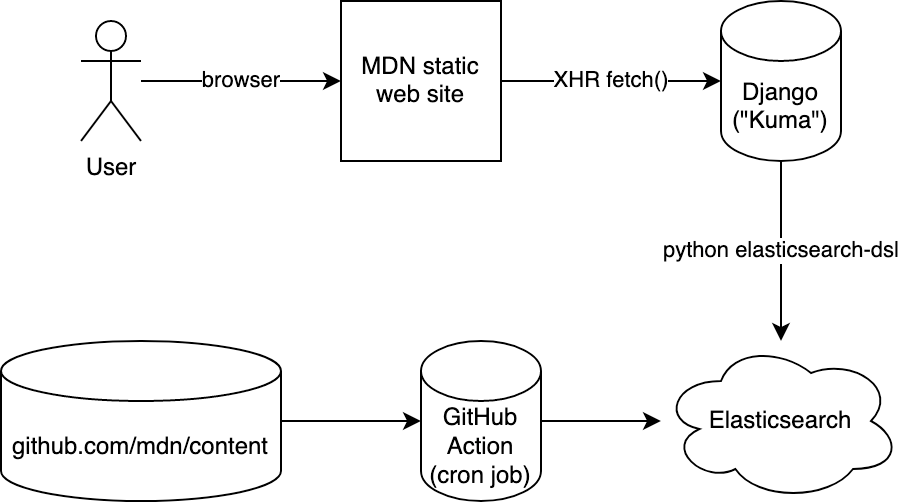

Static sites built with site generators are often called “Jamstack” sites, and MDN is one of them. The main issue with such sites is search. Elasticsearch is a search engine that is well suited to full-text search.

While building the static HTML pages, the build process also creates JSON files with essentially the same content. A Python script then strips all HTML markup from those JSON files and adds the content to the search index. The build runs daily. On every run the search index is deleted and recreated from scratch, so search is not fully available while the index is being rebuilt.
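
The markup-stripping step can be sketched with the Python standard library alone. This is a minimal illustration, not MDN's actual script: the JSON keys `title`, `slug`, and `body_html` are assumed names, and the real build output may be shaped differently.

```python
import json
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes and discards all tags."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self._chunks = []

    def handle_data(self, data):
        self._chunks.append(data)

    def text(self):
        # Join the text nodes, then collapse the whitespace left by removed tags.
        return " ".join(" ".join(self._chunks).split())


def strip_html(html):
    """Return the plain text contained in an HTML fragment."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


def to_search_document(raw_json):
    """Turn one build-output JSON page into a plain-text search document.

    The keys used here (title, slug, body_html) are hypothetical.
    """
    page = json.loads(raw_json)
    return {
        "title": page["title"],
        "slug": page["slug"],
        "body": strip_html(page["body_html"]),
    }
```

Each resulting dictionary could then be sent to the search index by whatever bulk-indexing client the build uses.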

A Django server converts search requests into the Elasticsearch API format. Each page has its “popularity” value, which is based on Google Analytics pageviews. This “popularity” value, combined with the score from Elasticsearch, defines the order of search results. In addition, Elasticsearch boosting adds extra points if, for example, the search phrase is found in the title.
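
One way to express this ranking in the Elasticsearch query DSL is a `function_score` query that wraps a `multi_match` with a boosted title field. The sketch below only builds the request body as a Python dictionary. The field names (`title`, `body`, `popularity`) and the weights are assumptions for illustration, and the query MDN actually sends may differ.

```python
def build_search_query(phrase, size=10):
    """Build an Elasticsearch request body that mixes text relevance with popularity.

    Field names and weights are hypothetical, chosen only for illustration.
    """
    return {
        "size": size,
        "query": {
            "function_score": {
                # Text relevance: a match in the title counts 5x more than in the body.
                "query": {
                    "multi_match": {
                        "query": phrase,
                        "fields": ["title^5", "body"],
                    }
                },
                # Popularity: add a multiple of the stored pageview-based value.
                "functions": [
                    {
                        "field_value_factor": {
                            "field": "popularity",
                            "factor": 10,
                            "missing": 0,
                        }
                    }
                ],
                # "sum" adds the popularity term to the text score instead of multiplying.
                "boost_mode": "sum",
            }
        },
    }
```

With `boost_mode` set to `sum`, a page with no recorded popularity still ranks on text relevance alone.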

The search results page is a blank HTML page that makes an async XHR request to the search API.

Cockroach Labs: From Batch to Streaming Data: Real Time Monitoring with Snowflake, Looker, and CockroachDB

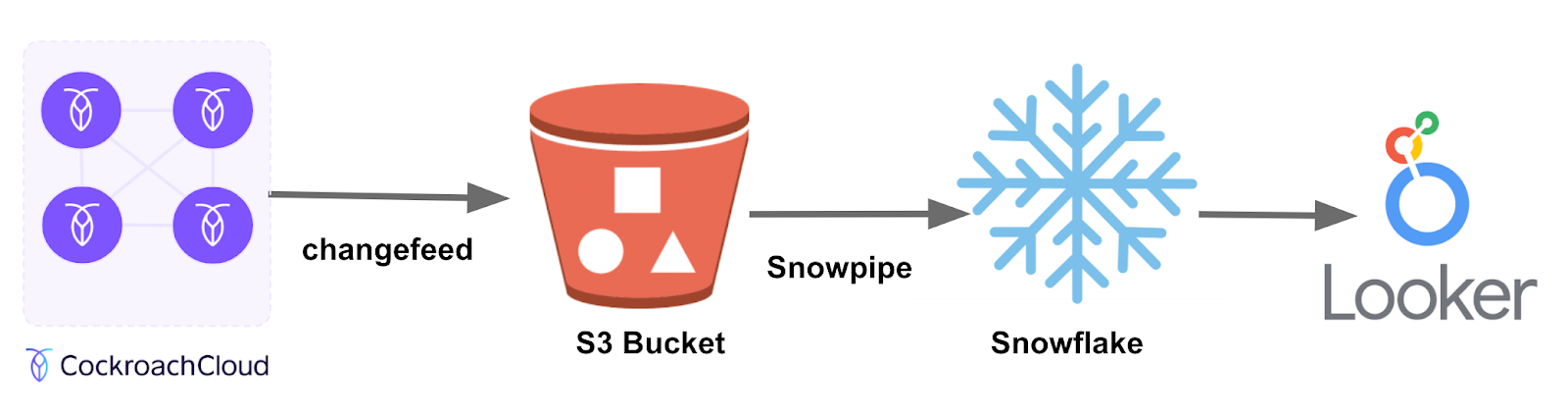

Cockroach Labs previously used batch processing to analyze telemetry data. Originally this was implemented as weekly cron jobs that exported data to Snowflake. The approach lacked flexibility and relied on manual work for extra runs.

The change data capture (CDC) feature lets you watch for changes in data and stream updates in near real time to various sinks, such as Kafka. This is useful with microservices and for keeping audit logs. With CDC, they load data into S3, then from S3 into Snowflake using Snowpipe. Looker, a business intelligence tool, draws graphs from the resulting data.

DoorDash: Rebuilding our Pricing Framework for Better Auditability, Observability, and Price Integrity

DoorDash had its pricing logic contained within a large monolithic application. The implementation was spread across the codebase and carried technical debt. In addition, they wanted to achieve high reliability, ensure integrity, etc.

They decided to extract this logic into a separate pricing service. Each request initiates a pipeline: initialize the context (get user, cart data, etc.), fetch item prices, delivery fees, etc., calculate the final price (for example, calculate tax), construct the response, then validate and persist the response.
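
The pipeline shape can be sketched as a list of stage functions applied in order. Everything concrete here is hypothetical: the catalog, the flat delivery fee, the 10% tax rate, and the in-memory audit log stand in for the real data sources and storage, which the summary does not describe.

```python
from decimal import Decimal

# Hypothetical inputs; a real service would call other systems for these.
PRICES = {"burger": Decimal("8.50"), "fries": Decimal("3.00")}
DELIVERY_FEE = Decimal("2.99")
TAX_RATE = Decimal("0.10")
AUDIT_LOG = []  # stands in for persistent storage


def initialize_context(request):
    return {"user_id": request["user_id"], "cart": request["cart"]}


def fetch_prices(ctx):
    ctx["subtotal"] = sum(
        (PRICES[item] * qty for item, qty in ctx["cart"].items()), Decimal("0")
    )
    ctx["delivery_fee"] = DELIVERY_FEE
    return ctx


def calculate_final_price(ctx):
    ctx["tax"] = (ctx["subtotal"] * TAX_RATE).quantize(Decimal("0.01"))
    ctx["total"] = ctx["subtotal"] + ctx["delivery_fee"] + ctx["tax"]
    return ctx


def construct_response(ctx):
    return {key: ctx[key] for key in ("user_id", "subtotal", "delivery_fee", "tax", "total")}


def validate_and_persist(response):
    parts = response["subtotal"] + response["delivery_fee"] + response["tax"]
    if response["total"] != parts or response["total"] < 0:
        raise ValueError("price components do not add up")
    AUDIT_LOG.append(response)
    return response


PIPELINE = [fetch_prices, calculate_final_price, construct_response, validate_and_persist]


def price_request(request):
    """Run one pricing request through every stage in order."""
    state = initialize_context(request)
    for stage in PIPELINE:
        state = stage(state)
    return state
```

Keeping each stage a plain function makes it straightforward to test stages in isolation and to log the intermediate state between them.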

The microservice was rolled out in parallel with the existing monolith. A job compared prices from both systems to ensure that the results were consistent. As a result of the migration, p95 latency decreased by 60% for the pricing endpoint.
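
A comparison job of this kind can be as simple as the sketch below. It assumes both systems' results are available as mappings from an order identifier to a price in cents. That data shape and the function name are hypothetical, not taken from the DoorDash article.

```python
def compare_prices(monolith_prices, service_prices, tolerance_cents=0):
    """Return (order_id, monolith_price, service_price) for every disagreement.

    Both arguments map an order id to a price in cents. An order missing
    from the new service is reported with None as its price.
    """
    mismatches = []
    for order_id, expected in monolith_prices.items():
        actual = service_prices.get(order_id)
        if actual is None or abs(actual - expected) > tolerance_cents:
            mismatches.append((order_id, expected, actual))
    return mismatches
```

An empty result over a large enough sample is the signal that traffic can be shifted to the new service.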

To ensure that the customer was charged the right amount, they used Redis to implement a price lock. All the relevant information is persisted for auditing, monitoring, and debugging.
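
The summary does not say how the lock works, so the following is only one plausible reading: the first quoted price for a cart is kept for a fixed time, and later quotes within that window return the locked price. The sketch uses an in-memory dictionary instead of Redis so that it runs without a server. In Redis, a similar "set only if absent, with expiry" behavior is available through `SET key value NX EX seconds`. The class name and the TTL are assumptions.

```python
import time


class InMemoryPriceLock:
    """Keeps the first quoted price per cart until the lock expires.

    A stand-in for a Redis-backed lock; not DoorDash's actual design.
    """

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._locks = {}  # cart_id -> (price_cents, expires_at)

    def lock(self, cart_id, price_cents):
        """Store the quoted price unless an unexpired lock exists; return the locked price."""
        now = self._clock()
        current = self._locks.get(cart_id)
        if current is not None and current[1] > now:
            return current[0]
        self._locks[cart_id] = (price_cents, now + self._ttl)
        return price_cents
```

Injecting the clock keeps the expiry logic testable without sleeping.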

Etsy: How We Built A Context-Specific Bidding System for Etsy Ads

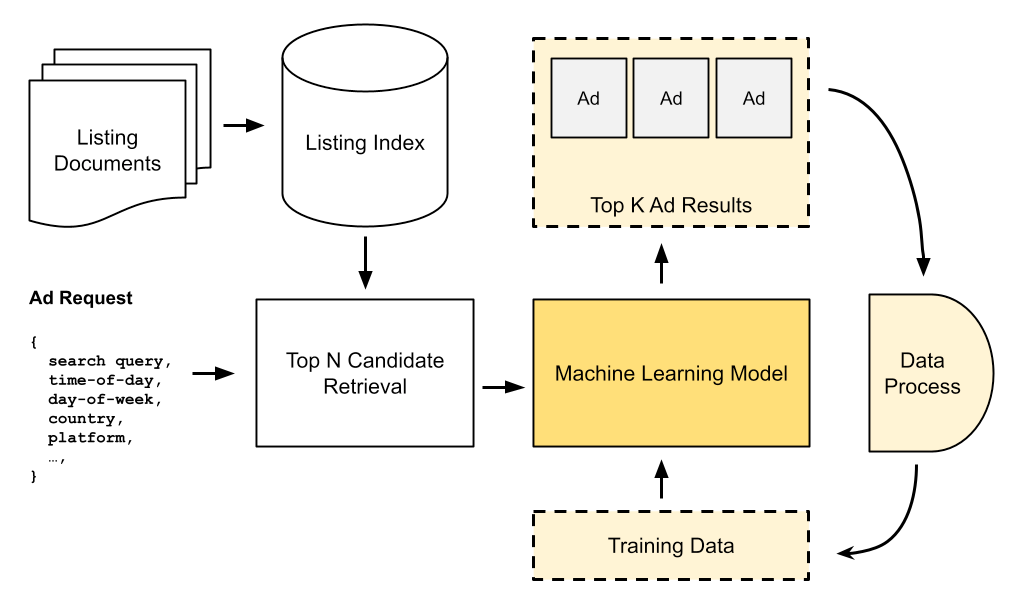

Etsy sellers can bid to show their ads in the platform's search results. The majority of sellers don’t adjust their bids professionally, so Etsy needed a system to improve ad performance. They noticed that ads perform differently depending on the context, hence the name: contextual bidding.

The new system takes many parameters into account: search relevance, time of day, day of week, platform, etc. They trained a machine learning model to predict rankings. On each search, the system matches candidate ads and predicts the post-click conversion rate. They also take the listing’s value into account when deciding whether to bid. Clicked-ads data is fed back into the training set.

The article describes some details of the ML model and the increase in CTR (click-through rate) and ROAS (return on ad spend).

System Design

Traffic Shedding, Rate Limiting, Backpressure, Oh My!

Automating Blue/Green Deployments for ECS Fargate with AWS CodeDeploy

Best Practices for Regression-free Machine Learning Model Migrations

Rethinking site capacity projections with Capacity Analyzer

Software Architecture

Detecting memory leaks in Android applications

How GitHub Actions renders large-scale logs

Product News

DigitalOcean becomes a public company

Amazon DynamoDB now supports audit logging and monitoring using AWS CloudTrail

Announcing HashiCorp Vault 1.7

Grafana 7.5 released: Loki alerting and label browser for logs, next-generation pie chart, and more!

What’s new in Kibana 7.12: Manage long-running searches in the background