Highlights

Uber: Handling Flaky Unit Tests in Java

While the headline mentions Java, this experience is language-agnostic and can be helpful with any other programming language.

The Uber team moved all their repositories into a single monolithic repository. This move makes it easier to manage dependencies, testing infrastructure, build systems, and static analysis tooling. Although the individual repos had stable tests, many tests became flaky after the merge into the monorepo. Why did that happen?

A unit test is considered flaky if it returns different results (pass or fail) on any two executions without any underlying change to the source code. The Uber team decided to classify every test on the main branch with 100 consecutive successful runs as stable, and the remaining tests as flaky.
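The classification rule above can be sketched in a few lines. This is a minimal illustration, not Uber's implementation: the function names are hypothetical, and it assumes "100 consecutive successful runs" means the most recent 100 runs on the main branch.

```python
from typing import Dict, List

STABLE_STREAK = 100  # threshold quoted in the text


def is_stable(history: List[bool], streak: int = STABLE_STREAK) -> bool:
    """Return True if the most recent `streak` runs all passed.

    `history` is ordered oldest to newest; True means the run passed.
    Assumption: a test with fewer than `streak` recorded runs is not stable yet.
    """
    if len(history) < streak:
        return False
    return all(history[-streak:])


def classify(histories: Dict[str, List[bool]]) -> Dict[str, str]:
    """Label every test as 'stable' or 'flaky' based on its run history."""
    return {
        name: "stable" if is_stable(runs) else "flaky"
        for name, runs in histories.items()
    }
```

A single failure inside the window is enough to move a test into the flaky bucket, which matches the strict definition given above.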

They built an internal tool called Test Analyzer. It runs the tests on the main branch, which should all pass since the code is already merged. However, tests that were stable in their original repos became flaky in the monorepo because of the far more complex execution environment and the number of tests running simultaneously. Test results are stored in a database, with historical test data kept in a data warehouse. The Test Analyzer Service exposes the data via an API to the Test Analyzer UI.

Failures associated with flaky tests are ignored when running tests for new code changes. Ignoring flaky tests introduces the risk of missing bugs in the functionality those tests should cover, but it’s a deliberate tradeoff to keep the development process moving.

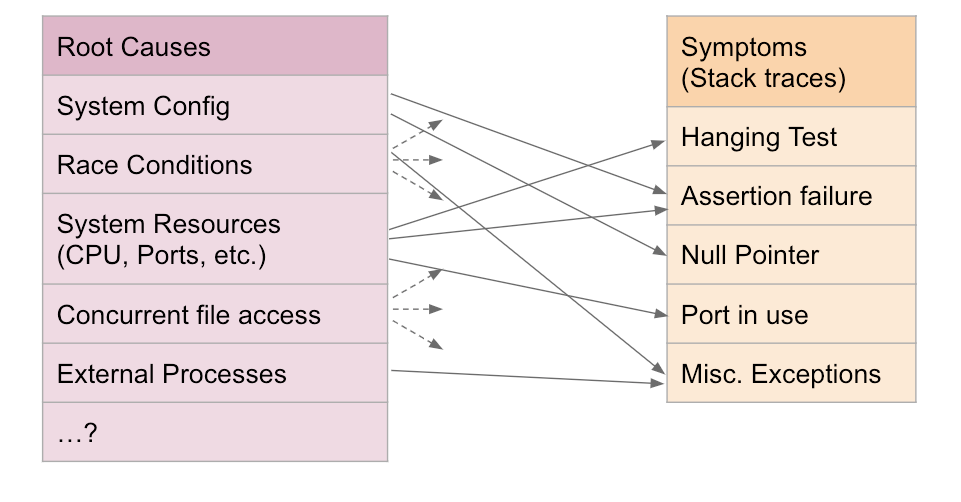

Following are the main reasons for the flaky tests:

- Port collisions happen when tests run embedded databases or servers on a hardcoded port. When tests run in parallel, such a service may find its port already taken by another test. Some services, such as Spark, have a UI enabled by default on a fixed port.

- Embedded databases or other services that are initiated at the beginning of tests may have some bugs in the way they are started or stopped.

- Many tests running in parallel may fail due to high CPU or memory contention.

Running databases and other auxiliary services in their own containers helps to prevent port issues, as each container can be mapped to a random available port. The Spark UI should be disabled for tests.
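The same "random available port" idea can be shown without containers. The sketch below is an assumption-laden illustration rather than anything from Uber's post: it binds to port 0, which asks the operating system to pick an unused port, and the helper name `open_on_free_port` is hypothetical.

```python
import socket


def open_on_free_port(host: str = "127.0.0.1") -> socket.socket:
    """Return a listening socket bound to a port chosen by the OS."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((host, 0))  # port 0 means "let the OS choose a free port"
    sock.listen()
    return sock


# Two stand-in services started side by side cannot collide,
# because each receives its own port while both sockets are open.
with open_on_free_port() as db, open_on_free_port() as web:
    db_port = db.getsockname()[1]
    web_port = web.getsockname()[1]
    print(db_port, web_port)
```

A test would then pass the discovered port to its client instead of relying on a constant.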

Running a test method alone, without the other tests in its class, can help reproduce failures caused by dependencies between tests.

To detect tests that use a constant port, a separate process called Port Claimer runs tests one at a time and records the ports they access. Port Claimer then holds those ports, so a test that depends on one of them fails on its next run.
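The claiming step can be illustrated with plain sockets. This is only a sketch of the idea, not the Port Claimer tool itself: it does not detect which ports a test touches, and `claim_port` and `can_bind` are hypothetical helpers.

```python
import socket


def claim_port(port: int, host: str = "127.0.0.1") -> socket.socket:
    """Bind and hold `port` so any later attempt to bind it fails."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((host, port))
    sock.listen()
    return sock


def can_bind(port: int, host: str = "127.0.0.1") -> bool:
    """Stand-in for a test that starts a server on a hardcoded port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
            return True
        except OSError:
            return False


# Port 0 is used here only to obtain some demo port; a real claimer
# would hold the port recorded during the earlier test run.
holder = claim_port(0)
port = holder.getsockname()[1]
blocked = not can_bind(port)  # the "test" cannot start while the port is held
holder.close()
print(blocked)
```

Making the failure deterministic is the point: a test that only collides occasionally in parallel runs now fails every time, which makes the hardcoded port easy to find.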

If tests have timing dependencies encoded internally, another common source of flakiness, the flakiness can be reproduced immediately by running the tests under additional load on the node.

The team has implemented custom checkers for the Error Prone framework for build-time static analysis of Java code. These checkers help to prevent the creation of new flaky tests by recognizing known patterns.

Uber engineering holds “Fix It Week” events to fix flaky tests. The number of flaky tests was reduced by about 85%. Flaky test runs were removed from CI/CD, which led to a better developer experience.

Zendesk: Data store migrations with no downtime

Zendesk migrated their NoSQL DynamoDB database to relational MySQL. There are two flavors of such migration: offline and online.

Offline migration means that applications must stop reading from and writing to the database, effectively going offline. Then all the data is migrated in batches from the old database to the new one. The downtime depends on the size of the data. In many cases, downtime is not acceptable as it means stopping all operations.

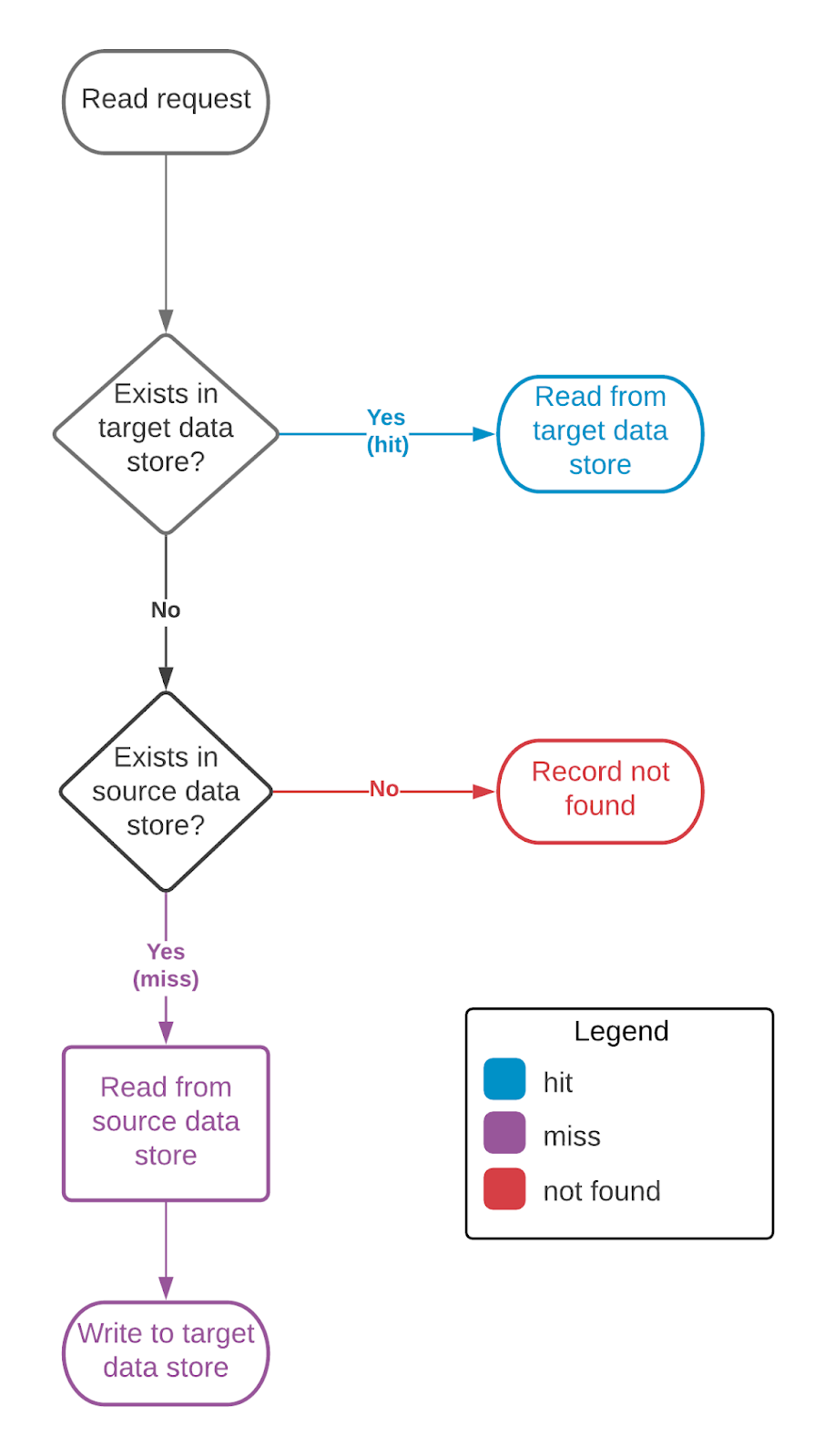

Online migration means that the application stays live during the migration. The application first attempts to read data from the new database; if the data is not found there, it reads from the old database. Data found in the old database is then copied to the new one.

Some records are rarely accessed, so online migration can be sped up with a backfill: in addition to the online migration, a background batch job migrates the remaining data.

The blog post also provides an interesting approach to monitoring such a migration where hits, misses, and backfill are logged separately and the process can be observed on graphs.
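The read path, the backfill, and the separate counters can be sketched together. This is a minimal in-memory illustration and not Zendesk's code: plain dictionaries stand in for DynamoDB and MySQL, the class and method names are hypothetical, and writes are left out because the summary above only describes reads.

```python
from collections import Counter
from typing import Dict, Optional


class MigratingStore:
    """Read-through migration from an old store to a new one."""

    def __init__(self, old: Dict[str, str], new: Dict[str, str]) -> None:
        self.old = old
        self.new = new
        self.stats: Counter = Counter()  # hit / miss / backfill counts

    def read(self, key: str) -> Optional[str]:
        """Read from the new store, falling back to the old one."""
        if key in self.new:
            self.stats["hit"] += 1
            return self.new[key]
        self.stats["miss"] += 1
        value = self.old.get(key)
        if value is not None:
            self.new[key] = value  # copy on read
        return value

    def backfill(self, batch_size: int = 100) -> int:
        """Copy up to `batch_size` records that were never read."""
        pending = [k for k in self.old if k not in self.new][:batch_size]
        for key in pending:
            self.new[key] = self.old[key]
        self.stats["backfill"] += len(pending)
        return len(pending)
```

Plotting the three counters over time shows the migration converging: misses fall toward zero as hits take over, and the backfill count accounts for the records nobody requested.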

Pinterest: How We Protect Passwords

One of the aspects of protecting passwords is identifying users with compromised credentials.

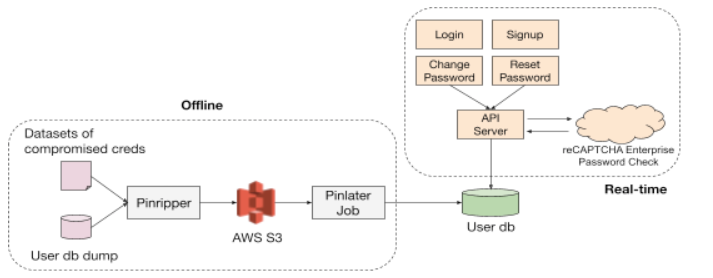

One way of doing that is to use the Google reCAPTCHA Enterprise Password Check API. It can detect compromised credentials on the fly during user signup, login, password change, or password reset. For example, a password change to known compromised credentials can be blocked. This is the real-time method.

The offline method involves using compromised credentials datasets and matching them with the user IDs. The list of matched user accounts is then uploaded to S3. An asynchronous job then flags them for a risk assessment.

If a high-risk user account logs in from an unknown device, we can immediately put the account in protected mode, invalidate all user sessions, and send out an email notification.
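The response to a risky login can be written as a small decision function. Everything here is hypothetical and only mirrors the rule stated above: the `Account` fields, the action names, and the in-memory session list are illustration, not Pinterest's system.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Account:
    user_id: str
    high_risk: bool  # set by the asynchronous risk-assessment job
    known_devices: Set[str] = field(default_factory=set)
    sessions: List[str] = field(default_factory=list)
    protected: bool = False


def handle_login(account: Account, device_id: str) -> List[str]:
    """Return the actions taken for this login attempt."""
    risky = account.high_risk and device_id not in account.known_devices
    if not risky:
        return ["allow"]
    account.protected = True    # put the account in protected mode
    account.sessions.clear()    # invalidate all existing sessions
    return ["protect_account", "invalidate_sessions", "send_email"]
```

A high-risk account logging in from a device it has used before is still allowed through, which keeps the disruption limited to the case the text describes.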

Instead of forcing high-risk user accounts to update their password, we can show them a banner asking them to protect the account. A user can choose to change the password or connect a social media account (SSO). If a user chooses SSO, the Pinterest password is disabled and the user logs in with the social media account from then on.

What Is Passwordless Authentication?

According to Sam Srinivas, Google Cloud Director of Product Management, passwordless authentication usage will grow rapidly. Credential vulnerabilities are responsible for 84% of data breaches, according to Verizon’s 2021 Data Breach Investigations Report.

Passwordless authentication is the process of verifying a software user’s identity with something other than a password. Phishing attacks usually aim to steal a user’s login and password. Credentials leaked from one website can be successfully used on another website, as people often reuse passwords. Removing passwords from the authentication process eliminates these risks.

Here are the most common types of passwordless authentication:

- Biometric. Facial recognition and fingerprint scanning are widespread on mobile devices.

- Magic Links. A user enters their email and a one-time login link is sent to the email.

- One-Time Passwords or Codes. Similar to magic links, but the user receives a one-time password or code by email and then enters it into the login form.

- Push Notification. The user confirms the login in an authentication app on their phone. Google accounts use this approach: when you try to log in, the Gmail app on the phone prompts you to confirm.
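The one-time code flow from the list above can be sketched with the Python standard library. This is an illustrative sketch under stated assumptions, not a production design: the five-minute lifetime, the six-digit format, the in-memory `_pending` store, and the function names are all hypothetical, and delivering the code by email is left out.

```python
import hmac
import secrets
import time
from typing import Dict, Optional, Tuple

CODE_TTL_SECONDS = 300  # assumed lifetime of a code

# email -> (code, expiry timestamp); a real service would use a shared store
_pending: Dict[str, Tuple[str, float]] = {}


def issue_code(email: str, now: Optional[float] = None) -> str:
    """Create a random six-digit code for `email` and remember it."""
    now = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"
    _pending[email] = (code, now + CODE_TTL_SECONDS)
    return code


def verify_code(email: str, submitted: str, now: Optional[float] = None) -> bool:
    """Check a submitted code; every code can be tried only once."""
    now = time.time() if now is None else now
    entry = _pending.pop(email, None)  # removed on first attempt
    if entry is None:
        return False
    code, expires_at = entry
    matches = hmac.compare_digest(code.encode(), submitted.encode())
    return now <= expires_at and matches
```

Removing the entry on the first attempt means a wrong guess also consumes the code, which limits guessing at the cost of asking the user to request a new one. A magic link works the same way, with a longer random token embedded in a URL instead of a code typed into a form.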

System Design

Managing Billions of Data Points: Evolution of Workflow Management at Groupon - Groupon migrated from Cron to Apache Airflow as a workflow automation tool.

Contextual Ads: Scylla at GumGum - another company moved from Cassandra to Scylla Cloud. The licensing and server costs for Scylla Cloud were slightly higher than for Cassandra, but they included support and cluster management, which the team was able to offload. This resulted in a net saving in Total Cost of Ownership (TCO).

MLOps — Is it a Buzzword??? Part 1

Text analytics on LinkedIn Talent Insights using Apache Pinot - LinkedIn developed and open-sourced Pinot, an OLAP datastore that is now an Apache project. Similar products include Apache Druid and ClickHouse. This blog post describes the journey of adding fast text search to the database.

A new Protocol Buffers generator for Go

Product News

Better JSON in Postgres with PostgreSQL 14

In PostgreSQL, a regular JSON field is validated on write and then stored as text, exactly as it was entered. A JSONB field stores the data in a decomposed binary format that does not preserve whitespace.

PostgreSQL 14 adds subscript syntax for querying JSONB that looks Pythonic:

    SELECT *
    FROM shirts
    WHERE details['attributes']['color'] = '"neon yellow"'
      AND details['attributes']['size'] = '"medium"';

Update follows the same format:

    UPDATE shirts
    SET details['attributes']['color'] = '"neon blue"'
    WHERE id = 123;

PostgreSQL 14 is set to be released in Q3 2021. An early beta is already available.

Stripe Identity - Stripe rolls out a new service to verify identity to prevent fraudulent payments.

Lyrid - Multicloud serverless.

Culture

An incomplete list of skills senior engineers need, beyond coding

A Guide to Personal Pronouns and How They’ve Evolved - According to the Oxford English Dictionary, the first record of the so-called “singular they” dates to 1375. Affirming a person’s pronouns demonstrates respect, reduces depression, increases self-esteem, and supports positive regard.